If you’ve asked yourself that as a SOC analyst, you’re not alone. While the short answer is no, you might be outpaced by a human analyst who is using AI to move faster, scale smarter, and get promoted while you’re still buried in alerts.

According to the World Economic Forum, 71% of chief risk officers expect severe organizational disruption due to cyber risks in the current threat landscape. The stakes have never been higher, and neither has the demand for AI-literate security professionals. Analysts who know how to wield AI are pulling in 30%+ higher salaries and being tapped for leadership roles.

Bottom line: The future of SecOps is AI-powered, and getting ahead now isn’t optional. It’s strategic. Adopt AI smartly, and you’re not just protecting your organization. You’re securing your own career trajectory.

How to jumpstart your AI adoption as a SOC analyst

You don’t need to become a machine learning engineer overnight to adopt AI. No one’s expecting you to train LLMs from scratch as a SOC analyst. But if you’re still doing everything manually? You’re working against the clock, and the tide.

AI is redefining what it means to be an analyst. Here’s how to get started with AI tools like ChatGPT.

1. Start with easy wins for your everyday workflows

You can use AI to review and correlate logs so you can easily identify malicious activity and where it’s coming from even if you are not a log analysis expert. In this example we upload AWS WAF and Edge server access logs to ChatGPT using this prompt to review the logs and summarize its findings:

Help me analyze and correlate these files for malicious activity. Provide a high-level summary for a SOC analyst who is not familiar with AWS WAF or Edge server logs. Provide a drill-down into specific IPs, timestamps, or URIs associated with the malicious activity so we can make sure these are being blocked.

Here is what it looks like in ChatGPT.

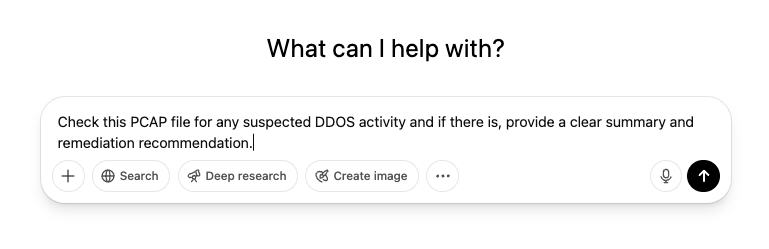

Converting a PCAP into CSV and then asking for anomaly detection or DDoS-style traffic breakdowns is another quick win. You can use the following prompt when you upload your converted PCAP file to ChatGPT (or another AI tool of choice).

Check this PCAP file for any suspected DDoS activity and, if there is any, provide a clear summary and remediation recommendation.

NOTE: You cannot parse PCAP files directly in ChatGPT. They must first be converted to CSV.
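The conversion step can be done with a few lines of scripting. The sketch below is one possible approach using only the Python standard library. It assumes a classic (non-pcapng) capture with Ethernet framing and IPv4 traffic, and the column names and the `pcap_rows` / `pcap_to_csv` helpers are illustrative choices, not part of any tool mentioned in this article.

```python
import csv
import io
import socket
import struct

# Illustrative column names; adjust to whatever your prompt refers to.
FIELDS = ["timestamp", "src_ip", "dst_ip", "protocol", "src_port", "dst_port", "length"]


def pcap_rows(data: bytes):
    """Yield one row per IPv4 packet in a classic, Ethernet-framed pcap."""
    # The first four bytes identify the format and the byte order of the headers.
    endian = {b"\xd4\xc3\xb2\xa1": "<", b"\xa1\xb2\xc3\xd4": ">"}.get(data[:4])
    if endian is None or len(data) < 24:
        raise ValueError("unsupported capture (only classic pcap is handled)")
    (link_type,) = struct.unpack(endian + "I", data[20:24])
    if link_type != 1:  # 1 = Ethernet
        raise ValueError("only Ethernet captures are handled")

    offset = 24  # skip the 24-byte global header
    while offset + 16 <= len(data):
        ts_sec, ts_usec, incl_len, orig_len = struct.unpack(
            endian + "IIII", data[offset:offset + 16]
        )
        frame = data[offset + 16:offset + 16 + incl_len]
        offset += 16 + incl_len

        # Skip anything that is not plain IPv4 (for example ARP, IPv6, VLAN-tagged frames).
        if len(frame) < 34 or frame[12:14] != b"\x08\x00":
            continue
        ip = frame[14:]
        header_len = (ip[0] & 0x0F) * 4
        protocol = ip[9]  # 6 = TCP, 17 = UDP

        src_port = dst_port = ""
        if protocol in (6, 17) and len(ip) >= header_len + 4:
            src_port, dst_port = struct.unpack("!HH", ip[header_len:header_len + 4])

        yield [
            f"{ts_sec}.{ts_usec:06d}",
            socket.inet_ntoa(ip[12:16]),
            socket.inet_ntoa(ip[16:20]),
            protocol,
            src_port,
            dst_port,
            orig_len,
        ]


def pcap_to_csv(data: bytes) -> str:
    """Return the capture as CSV text with a header row."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(FIELDS)
    writer.writerows(pcap_rows(data))
    return out.getvalue()
```

To use it, read the capture with `open(path, "rb").read()`, pass the bytes to `pcap_to_csv`, and save the result as a `.csv` file for upload. Dedicated tools export far more fields; this keeps only the columns a traffic-volume question usually needs.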

You can also shave off hours from your daily investigations by feeding AI with your existing playbooks. It can run through all the desired steps on the data you provide and then deliver an assessment of where to focus your attention.

NOTE: You might need to chunk it out so the AI can process the investigative questions in a timely and focused fashion.
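One way to chunk a playbook is to turn each phase into its own prompt and finish with a synthesis prompt. The sketch below illustrates that idea. The `PlaybookPhase` structure, the prompt wording, and the example phases are all hypothetical, so substitute the steps from your own playbook.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PlaybookPhase:
    """One phase of an investigation playbook (hypothetical structure)."""
    name: str
    questions: List[str]


def phase_prompts(phases: List[PlaybookPhase], data_description: str) -> List[str]:
    """Build one prompt per playbook phase, plus a closing synthesis prompt."""
    prompts = []
    for number, phase in enumerate(phases, start=1):
        questions = "\n".join(f"- {q}" for q in phase.questions)
        prompts.append(
            f"Playbook phase {number} of {len(phases)}: {phase.name}\n"
            f"Data provided: {data_description}\n"
            f"Answer only these questions, citing the log lines you relied on:\n"
            f"{questions}"
        )
    # The last prompt asks for the assessment of where to focus attention.
    prompts.append(
        "Using your answers from every phase above, write one incident narrative "
        "and list where an analyst should focus first."
    )
    return prompts
```

Send the prompts in order within the same conversation so the final synthesis prompt can draw on the earlier answers.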

Let’s look at another ChatGPT prompt, shared by a CISO of a leading financial institution, which is full of great guidelines.

You can use it as a template and tweak it for your environment:

Context:

I’m a seasoned cybersecurity expert with over 20 years in IR, forensics, and threat hunting.

Instructions:

I will upload one or more log files or PCAP‑converted CSVs (firewall, IDS/IPS, auth, endpoint, Windows Event, etc.).

Your mission:

1. Detect malicious/suspicious activity (compromised hosts, IOCs, anomalous patterns, privilege escalation, lateral movement, or persistence).

2. Summarize findings for technical and non‑technical audiences.

3. Demonstrate your investigative reasoning, map activity to MITRE ATT&CK tactics, use chain-of-events logic and contextual analysis.

4. Recommend containment & hardening steps.

Guidelines:

If no files are uploaded, prompt the user to upload log files.

If input is unclear, ask clarifying questions.

Do not guess; ground all conclusions in observable data.

Be exhaustive in analysis. Focus on operational relevance and accuracy.

2. Upskill with AI best practices

Your career opportunities and impact as a SOC analyst, or even SOC manager, multiply exponentially when you can guide AI tools effectively with both prompts and processes.

Some must-know battle-tested best practices include:

- Respect context windows: Stick to ~2,000 lines per query to avoid model confusion. Gemini and larger Llama 4 variants can handle more, but always chunk if you hit limits.

- Chunk large inputs: Break your data set into logical pieces: analyze part 1, then part 2, then summarize the overall narrative.

- Leverage playbook-driven prompts: Feed AI one playbook phase at a time, then ask it to synthesize an overall incident narrative.

- Protect sensitive data: Never upload PII or internal IP ranges to public models. With ChatGPT Enterprise or private LLMs, disable data training to safeguard your organization’s IP.
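The chunking advice above can be sketched as a small helper that splits a log into parts of at most 2,000 lines and wraps each part in a prompt. The function names and prompt wording here are illustrative assumptions; tune `max_lines` to the model you actually use.

```python
from typing import Iterable, Iterator, List


def chunk_lines(lines: Iterable[str], max_lines: int = 2000) -> Iterator[List[str]]:
    """Yield consecutive groups of at most max_lines lines."""
    chunk: List[str] = []
    for line in lines:
        chunk.append(line)
        if len(chunk) == max_lines:
            yield chunk
            chunk = []
    if chunk:  # emit the final, shorter part
        yield chunk


def build_prompts(lines: Iterable[str], max_lines: int = 2000) -> List[str]:
    """Return one prompt per part, followed by a prompt asking for the overall narrative."""
    chunks = list(chunk_lines(lines, max_lines))
    prompts = [
        f"Part {number} of {len(chunks)}. Analyze these log lines for malicious "
        f"activity and keep notes for a final summary.\n\n" + "\n".join(chunk)
        for number, chunk in enumerate(chunks, start=1)
    ]
    prompts.append(
        "All parts have been provided. Summarize the overall narrative across all parts."
    )
    return prompts
```

Splitting on line count is the simplest option. If your logs have natural boundaries, such as one file per host or per hour, splitting on those usually gives the model more coherent pieces.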

These practices help align general-purpose AI with your SOC, turning it into a force multiplier that keeps pace with your team’s workflow.
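For the data-protection practice in particular, a pre-processing pass can mask internal addresses and email addresses before anything leaves your environment. The sketch below is a starting point under stated assumptions: "internal" means the RFC 1918 private ranges (extend `INTERNAL_NETS` with your own), the email pattern is deliberately simple, and the placeholder format is made up for this example. It is not a complete PII scrubber.

```python
import ipaddress
import re

# Assumption: treat the RFC 1918 private ranges as "internal". Add your own ranges.
INTERNAL_NETS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")


def redact(text: str):
    """Mask internal IPv4 addresses and emails; return (redacted_text, placeholder_map)."""
    mapping = {}  # (kind, original value) -> placeholder

    def placeholder(kind: str, value: str) -> str:
        key = (kind, value)
        if key not in mapping:
            count = sum(1 for existing in mapping if existing[0] == kind) + 1
            mapping[key] = f"<{kind}_{count}>"
        return mapping[key]

    def replace_ip(match):
        raw = match.group(0)
        try:
            address = ipaddress.ip_address(raw)
        except ValueError:
            return raw  # looked like an IP but is not a valid one
        if any(address in net for net in INTERNAL_NETS):
            return placeholder("INTERNAL_IP", raw)
        return raw  # external addresses stay visible for the analysis

    text = EMAIL_RE.sub(lambda m: placeholder("EMAIL", m.group(0)), text)
    text = IPV4_RE.sub(replace_ip, text)
    # Keep the reverse map locally so findings can be translated back.
    return text, {token: key[1] for key, token in mapping.items()}
```

Because the same value always maps to the same placeholder, the model can still correlate activity across lines, and you can translate its findings back with the returned map, which should never be uploaded.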

3. Become the AI advocate in your team

Be the one who knows what’s possible with AI and what isn’t. Share wins, teach others, and build your brand as someone who drives real results with smart tech.

When you lead by example, you don’t just elevate your own career, you raise the bar for your entire team.

DIY AI is just the starting point for modern SOCs

Individual wins like the ones shared above are great, but scaling AI across the SOC is the real game changer.

Picture this: A ransomware campaign slips past initial detection. While your team manually correlates alerts from siloed tools, the attackers have already exfiltrated the data.

This is today’s reality for SOCs:

- Thousands of daily alerts make proper triage unmanageable and lead to almost certain burnout.

- Gaps in coverage are impossible to fill, with 67% of SOCs reporting a staffing shortage in 2024.

- And, the increased complexity of cyberattacks means that SOCs need to deal with AI-enhanced cyberattacks that evade even advanced detection.

Without an integrated AI SOC solution, you will have a real math problem. As alerts often require 30+ minutes of investigation and arrive every 20 seconds, there are just not enough human SOC analysts in the world to tackle all these potential threats.
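To make that math concrete, here is a back-of-the-envelope calculation using the two figures above: one alert every 20 seconds and 30 minutes per investigation. The 8 productive hours per analyst shift is an added assumption for illustration, not a figure from this article.

```python
def staffing_estimate(
    seconds_between_alerts: float = 20.0,
    minutes_per_alert: float = 30.0,
    productive_hours_per_shift: float = 8.0,  # assumption for illustration
) -> dict:
    """Estimate the analyst effort needed to investigate every alert by hand."""
    alerts_per_day = 24 * 3600 / seconds_between_alerts
    analyst_hours_per_day = alerts_per_day * minutes_per_alert / 60
    # Alerts that arrive while one investigation is still running must each be
    # picked up by a different analyst, so this many are busy at any moment.
    analysts_busy_at_once = minutes_per_alert * 60 / seconds_between_alerts
    shifts_per_day = analyst_hours_per_day / productive_hours_per_shift
    return {
        "alerts_per_day": alerts_per_day,
        "analyst_hours_per_day": analyst_hours_per_day,
        "analysts_busy_at_once": analysts_busy_at_once,
        "shifts_per_day": shifts_per_day,
    }


print(staffing_estimate())
```

With the default inputs this works out to 4,320 alerts and 2,160 analyst-hours per day: 90 analysts investigating around the clock, or 270 eight-hour shifts every day.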

And you can’t “DIY” your way out of this problem with ChatGPT hacks alone. These DIY AI efforts fall short because:

- Public AI services can’t meet your compliance requirements, so you still have to manually handle sensitive data and compliance concerns.

- There’s no seamless integration with your existing detection tools, sensors or SIEM, so AI services cannot access your environment. Certainly not in a scalable manner.

- Plus, you’re not using security-native AI models trained on your kind of data.

What you need in your SOC is a purpose-built AI security platform that can handle scale, speed, and compliance—all in real time.

Welcome to the AI-powered SOC

Enter a new era where you are part of an AI-powered SOC that empowers you as an analyst: Shift from doing all the heavy lifting to reviewing incident summaries and recommended remediation actions.

Let’s be clear: AI cannot replace you as a human analyst. Security requires creativity, intuition, and business context—these are human traits that AI simply can’t replicate.

This is where AI-powered SOC solutions like Radiant Security step in: not to replace you, but to help you do what you do best, better.

How does Radiant’s AI-powered SOC work?

Radiant’s AI goes beyond pattern matching to understand context across your full tech stack:

- The AI analyzes alerts with all available telemetry data from your environment so that it can understand full context and accurately assess whether an alert is truly malicious.

- Based on this contextual understanding, the platform provides next-step suggestions for incidents.

- As an analyst, you maintain control by reviewing these recommendations: modify them, ignore them or implement them in one click.

- As trust builds, like most Radiant customers, you can fully automate recommended response actions.

With Radiant, all alerts are auto-triaged. You can spot-check as needed, and focus on real threats, at scale. And all this happens within a legally compliant, PII-respecting framework where you can trust the safety of your data.

How AI changes the SOC analyst role—for the better

With an AI-powered SOC, you’re no longer stuck in reactive mode—you’re operating with foresight. And, let’s be honest: Nobody got into cybersecurity to spend their days clicking through alerts or triaging the same false positives over and over. You want to track down lateral movement, spot novel exploits, reverse-engineer malware—you know, the cool stuff that actually stops attackers in their tracks.

AI unlocks this opportunity for you, automating the mundane so you can step into higher-impact territory:

- For Tier 1 analysts: AI filters out the noise, scores alerts based on real-time risk, and enriches them before they even hit your screen. Instead of being overwhelmed by volume, you’re focused on the threats that actually matter.

- For Tier 2 analysts: AI correlates events across tools and timelines, stitching together narratives that would’ve taken hours to build manually. This means you can focus on validating conclusions, refining insights, and uncovering the outliers AI might miss.

- For Tier 3 analysts: AI works with you to surface what’s interesting in the cyber landscape by pulling in threat intel. It flags behavioral anomalies and gives you a head start in identifying emerging attack patterns or zero-days before they hit the news.

Adopting AI in your SOC doesn’t mean less security. It’s about smarter security—and actually having time to think, strategize, and stay ahead of threats instead of playing catch-up.

The AI adoption gap: Why some SOC teams fall behind

The advent of AI-powered SOCs has created a clear divide in the security operations landscape:

- Forward-thinking SOCs use AI to handle up to 90% of routine tasks, allowing analysts to focus on high-impact security work.

- Traditional SOCs continue struggling with alert backlogs and staff burnout, falling further behind with each passing month.

So why do some SOCs get stuck in the old ways? Let’s look at the most common objections to AI adoption in a SOC and how to address them with Radiant’s AI-powered SOC:

- Leadership lacks trust in AI: Decision-makers hesitate due to misconceptions about AI reliability.

“I’ll lose control of my environment.” → Nope. Radiant’s AI suggests, you decide.

“We can’t trust AI with sensitive data.” → Radiant provides compliance and data protection by design.

- Legacy systems and siloed data: Fragmented infrastructure prevents effective AI implementation.

“Our systems are too legacy.” → Radiant integrates with what you’ve got.

- Skills gap: Teams lack the expertise to effectively adopt AI despite available tools.

“Our team doesn’t have the AI skills.” → Radiant is designed for intuitive use. You just need curiosity and some basic training.

- Budget constraints: Organizations prioritize reactive measures over strategic AI investments.

“The ROI isn’t clear enough.” → What is the cost of not automating when alert volume grows year over year and analysts are hard to find? Additionally, our comprehensive log management capabilities (included at no extra cost) can potentially replace expensive SIEMs, freeing up budget for an AI-powered SOC.

- Compliance challenges: Regulatory concerns slow down AI adoption in sensitive environments.

“Compliance is too complicated with third-party vendors.” → Radiant can help you break through the security and compliance gridlock.

Moving forward: Future-proof your career and SOC

AI isn’t coming for your job—but it is coming to transform it into something more strategic, impactful, and sustainable. Who will be the real winners? Anyone who understands how to leverage AI before everyone else.

For your SOC to succeed, you don’t just need AI tools—you need to transform your SOC so that it’s built for AI. Radiant Security helps you do exactly that by designing AI security that enhances your human element.

Need some evidence? Just look at how Kyowa Kirin reduced mean time to respond (MTTR) from days to hours with Radiant Security. Ready to future-proof your SOC with AI—and your role in it? Learn more about Radiant Security, or book a demo to see the power of AI SecOps in action.
